Orchestrator user guide

Jobs

A job represents the execution of a process on a UiPath Robot. You can launch a job in either attended or unattended mode. Jobs cannot be launched from Orchestrator on attended robots, and attended robots cannot run under a locked screen.

| Attended Mode | Unattended Mode |
|---|---|
| UiPath Robot tray, UiPath Assistant, or the command line | Automations Page > Jobs, Automations Page > Triggers, Automations Page > Processes |

By default, a process can be edited even while it has associated running or pending jobs.

- Running jobs associated with a modified process continue to use the initial version of the process. The updated version is used by newly created jobs, or the next time the same job is triggered.

- Pending jobs associated with a modified process use the updated version.
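The versioning rule above can be modelled in a few lines. This is a simplified illustration, not Orchestrator's implementation: it assumes a job captures the process version at the moment it starts, so a running job is pinned while a pending job picks up any later edit. The process name `InvoiceProcessing` and the version strings are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Process:
    name: str
    version: str


@dataclass
class Job:
    process: Process
    state: str = "Pending"
    # Version captured when the job starts; None while the job is still pending.
    pinned_version: Optional[str] = None

    def start(self) -> None:
        # A pending job resolves the process version only when it starts,
        # so it uses whatever version is current at that moment.
        self.pinned_version = self.process.version
        self.state = "Running"

    def effective_version(self) -> str:
        # Running jobs keep the version they started with.
        if self.pinned_version is not None:
            return self.pinned_version
        return self.process.version


invoice = Process("InvoiceProcessing", "1.0.0")  # hypothetical process
running = Job(invoice)
running.start()
pending = Job(invoice)

invoice.version = "1.0.1"  # the process is edited while both jobs exist

print(running.effective_version())  # 1.0.0
print(pending.effective_version())  # 1.0.1
```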

There are three possible job sources, depending on the job launching mechanism:

- Manual - the job has been started and configured from the Jobs/Triggers/Processes pages, using the Start button.

- Agent - the job has been started in attended mode from the UiPath Robot tray, UiPath Assistant, or the command line.

- [Trigger_Name] - the job has been launched through a trigger, used for preplanned job execution.

| Execution Target | Description |
|---|---|
| Allocate Dynamically | A foreground process is executed as many times as specified, under the user and machine that become available first. If a user is also selected, only the machine is allocated dynamically. Background processes are executed under any user, regardless of whether that user is busy, as long as you have sufficient runtimes. You can execute a process up to 10000 times. |
| Specific Robots | The process is executed by the Robots you select from the robots list. |

On a host machine, you need to provision a Windows user for each user that belongs to the folders to which the corresponding machine template is assigned.

Say you connected a server to Orchestrator using the key generated by the FinanceT machine template. That machine template is assigned to the FinanceExecution and FinanceHR folders, where 6 users are assigned as well. Those 6 users need to be provisioned as Windows users on the server.
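A minimal sketch of the provisioning rule, assuming the template-to-folder and folder-to-user assignments are available as plain dictionaries (a hypothetical data shape, not an Orchestrator API). Only FinanceT, FinanceExecution, and FinanceHR come from the example above; the six user names are hypothetical. The sketch takes the union of folder members, on the assumption that a user assigned to both folders needs only one Windows account.

```python
def users_to_provision(template: str,
                       template_folders: dict[str, set[str]],
                       folder_users: dict[str, set[str]]) -> set[str]:
    """Return every user who needs a Windows account on a host connected with `template`."""
    needed: set[str] = set()
    for folder in template_folders.get(template, set()):
        # Every user assigned to any folder the template is assigned to needs an account.
        needed |= folder_users.get(folder, set())
    return needed


template_folders = {"FinanceT": {"FinanceExecution", "FinanceHR"}}
folder_users = {  # hypothetical user names
    "FinanceExecution": {"user.a", "user.b", "user.c"},
    "FinanceHR": {"user.d", "user.e", "user.f"},
}

accounts = users_to_provision("FinanceT", template_folders, folder_users)
print(sorted(accounts))  # the 6 users to create as Windows users on the server
```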

If you configure a job to execute the same process multiple times, a job entry is created for each execution. The jobs are ordered based on their priority and creation time, with higher priority, older jobs being placed first in line. As soon as a robot becomes available, it executes the next job in line. Until then, the jobs remain in a pending state.
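The ordering described above behaves like a priority queue keyed on (priority, creation time). The sketch below is an illustration under that assumption, not Orchestrator's scheduler: a monotonically increasing counter stands in for creation time, and the job names and the rank mapping are hypothetical.

```python
import heapq
from itertools import count

# Lower rank is served first.
PRIORITY_RANK = {"High": 0, "Normal": 1, "Low": 2}


class PendingJobs:
    """Pending jobs ordered by priority first, then by creation time (oldest first)."""

    def __init__(self) -> None:
        self._heap: list[tuple[int, int, str]] = []
        self._created = count()  # stand-in for creation time

    def add(self, name: str, priority: str) -> None:
        heapq.heappush(self._heap, (PRIORITY_RANK[priority], next(self._created), name))

    def next_job(self) -> str:
        # Called when a robot becomes available; until then, jobs stay pending.
        return heapq.heappop(self._heap)[2]


queue = PendingJobs()
queue.add("job-1", "Normal")
queue.add("job-2", "High")
queue.add("job-3", "High")
queue.add("job-4", "Low")

order = [queue.next_job() for _ in range(4)]
print(order)  # ['job-2', 'job-3', 'job-1', 'job-4']
```

Because the creation counter is the second element of the key, two jobs with the same priority are served oldest first, which matches the rule above.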

Setup

- 1 folder

- 1 machine template with two runtimes

- 2 users: john.smith and petri.ota

- 2 processes that require user interaction: P1, which adds items to a queue, and P2, which processes the items in the queue

- The machine template and the users must be associated with the folder containing the processes.

Desired Outcome

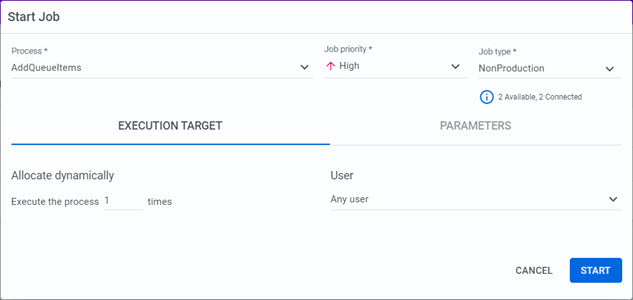

- P1 is executed with a high priority by anyone.

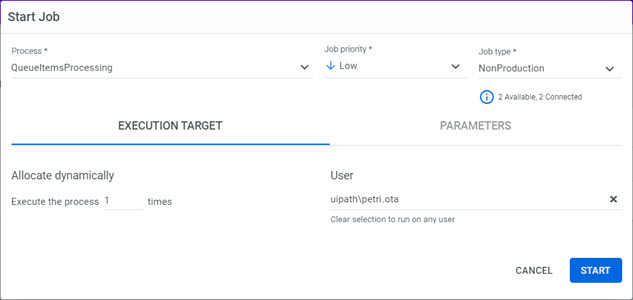

- P2 is executed with a low priority by petri.ota.

Required Job Configuration

- Start a job for P1, do not assign it to any particular user, and set the priority to High.

- Start a job for P2, assign it to petri.ota, and set the priority to Low.
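The example can be traced with a simplified dispatcher. This sketch assumes that a job is either unassigned (any user may run it) or assigned to exactly one user, and that a free user takes the highest-priority, oldest job they are allowed to run. `PendingJob` and `pick_next` are hypothetical helpers, not Orchestrator APIs.

```python
from dataclasses import dataclass
from typing import Optional

PRIORITY_RANK = {"High": 0, "Normal": 1, "Low": 2}


@dataclass
class PendingJob:
    process: str
    priority: str
    created: int                  # creation order; lower means older
    assigned_user: Optional[str]  # None means any user may run it


def pick_next(jobs: list[PendingJob], user: str) -> Optional[PendingJob]:
    """Remove and return the first job in line that `user` may run, or None."""
    eligible = [j for j in jobs if j.assigned_user in (None, user)]
    if not eligible:
        return None
    job = min(eligible, key=lambda j: (PRIORITY_RANK[j.priority], j.created))
    jobs.remove(job)
    return job


pending = [
    PendingJob("P2", "Low", created=0, assigned_user="petri.ota"),
    PendingJob("P1", "High", created=1, assigned_user=None),
]

first = pick_next(pending, "john.smith")   # P1: unassigned, so john.smith may run it
second = pick_next(pending, "john.smith")  # None: P2 is reserved for petri.ota
third = pick_next(pending, "petri.ota")    # P2
```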

You can control which job has precedence over other competing jobs through the Job Priority field either when deploying the process, or when configuring a job/trigger for that process. A job can have one of the following priorities: Low (↓), Normal (→), High (↑).

The priority is inherited from where it was initially configured. You can either leave it as it is or change it.

- Automations Page > Jobs: Inherits the priority set at the process level.

- Automations Page > Triggers: Inherits the priority set at the trigger level. If the trigger itself inherits the process-level priority, that value is used.

- Automations Page > Processes: Uses the priority set for that process.

The priority is set by default to Inherited, meaning it inherits the value set at the process level. Choosing a process automatically updates the arrow icon to show the value set at the process level. Any jobs launched by the trigger have the priority set at the trigger level. If the default Inherited value is kept, the jobs are launched with the priority set at the process level.
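The inheritance chain above can be expressed as a fallback lookup. In this sketch, `None` stands for the default Inherited value (an assumption of the model, not an Orchestrator setting); a job started from a trigger is modelled by passing only `trigger_priority`, and a job started manually by passing only `job_priority`.

```python
from typing import Optional

INHERITED = None  # a job or trigger left at the default "Inherited" value


def effective_priority(process_priority: str,
                       trigger_priority: Optional[str] = INHERITED,
                       job_priority: Optional[str] = INHERITED) -> str:
    """Resolve the priority of a launched job, falling back job -> trigger -> process."""
    if job_priority is not INHERITED:
        return job_priority
    if trigger_priority is not INHERITED:
        return trigger_priority
    return process_priority


print(effective_priority("Normal"))                           # Normal: inherited everywhere
print(effective_priority("Normal", trigger_priority="High"))  # High: set on the trigger
print(effective_priority("Low", job_priority="High"))         # High: changed when starting the job
```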

There are two types of processes, according to their user interface requirements:

- Background Process - Does not require a user interface or user intervention to be executed. For this reason, you can execute multiple such jobs in unattended mode on the same user simultaneously. Each execution requires an Unattended/NonProduction license. Background processes run in Session 0 when started in unattended mode.

- Requires User Interface - Requires a user interface, because the execution needs the UI to be generated or the process contains interactive activities, such as Click. You can execute only one such process on a user at a time.

The same user can execute multiple background processes and a single UI-requiring process at the same time.
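The concurrency rule can be sketched as a simple admission check. This is a hypothetical model, not Orchestrator code: it assumes every execution, background or UI-requiring, consumes one runtime from the user's allocation, and `UserSession` is an illustrative name.

```python
from dataclasses import dataclass


@dataclass
class UserSession:
    runtimes: int                # runtimes available to this user (assumed limit)
    running_background: int = 0
    running_ui: int = 0

    def can_start(self, requires_ui: bool) -> bool:
        # Assumption: each execution consumes one runtime.
        if self.running_background + self.running_ui >= self.runtimes:
            return False
        if requires_ui:
            # Only one UI-requiring process per user at a time.
            return self.running_ui == 0
        # Background processes can run side by side.
        return True


session = UserSession(runtimes=3, running_background=1, running_ui=1)
print(session.can_start(requires_ui=False))  # True: another background job fits
print(session.can_start(requires_ui=True))   # False: a UI process is already running
```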

If you start a job on multiple High-Density Robots from the same Windows Server machine, the selected process is executed by each specified Robot at the same time. A job instance is created for each of these executions and displayed on the Jobs page.

If you are using High-Density Robots and did not enable RDP on that machine, each time you start a job, the following error is displayed: “A specified logon session does not exist. It may already have been terminated.”

For details on setting up your machine for High-Density Robots, see the About Setting Up Windows Server for High-Density Robots page.

Processes that require logical fragmentation or human intervention (validations, approvals, exception handling), such as invoice processing and performance reviews, are handled with a set of tools in the UiPath suite: a dedicated Studio project template called Orchestration Process, plus actions and resource allocation capabilities in Action Center.

Broadly, you configure your workflow with a pair of activities. The workflow can be parameterized with the specifics of the execution, so that a suspended job can only be resumed once certain requirements have been met. Resources are allocated for job resumption only after those requirements are met, so no resources are wasted while the job waits.

In Orchestrator, the job is suspended while it waits for the requirements to be met, then resumed and executed as usual. The completion requirements depend on which pair of activities you use, and the Orchestrator response adjusts accordingly.
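The suspend and resume cycle can be pictured as a small state machine. The sketch below is a simplified model, not Orchestrator's implementation: it assumes a suspended job waits for a set of named requirements, moves to Resumed once all of them are met, and only then can be assigned to a robot. The requirement name `invoice-approval` is hypothetical.

```python
from enum import Enum


class JobState(Enum):
    RUNNING = "Running"
    SUSPENDED = "Suspended"
    RESUMED = "Resumed"  # requirements met, waiting for an available robot


class LongRunningJob:
    def __init__(self) -> None:
        self.state = JobState.RUNNING
        self.pending_requirements: set[str] = set()

    def suspend(self, requirements: set[str]) -> None:
        # The job releases its robot and waits; nothing is executed meanwhile.
        self.pending_requirements = set(requirements)
        self.state = JobState.SUSPENDED

    def requirement_met(self, name: str) -> None:
        self.pending_requirements.discard(name)
        if self.state is JobState.SUSPENDED and not self.pending_requirements:
            # Only now does the job become eligible for resources again.
            self.state = JobState.RESUMED

    def assign_robot(self) -> None:
        if self.state is not JobState.RESUMED:
            raise RuntimeError("only resumed jobs can be assigned to a robot")
        self.state = JobState.RUNNING


job = LongRunningJob()
job.suspend({"invoice-approval"})
job.requirement_met("invoice-approval")
job.assign_robot()
print(job.state.value)  # Running
```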

| Activities | Use Case |
|---|---|
| | Introduce a job condition, such as uploading queue items. After the main job has been suspended, the auxiliary job is executed. After this process completes, the main job is resumed. Depending on how you configured your workflow, the resumed job can use the data obtained from the auxiliary process execution. |

Note: If your workflow uses the Start Job and Get Reference activity to invoke another workflow, your Robot role should be updated with the following permissions:

| Activities | Use Case |
|---|---|
| | Introduce a queue condition, such as having queue items processed. After the main job has been suspended, the queue items need to be processed through the auxiliary job. After this process is complete, the main job is resumed. Depending on how you configured your workflow, the resumed job can make use of the output data obtained from the processed queue item. |

Form Actions

| Activities | Use Case |
|---|---|
| | Introduce user intervention conditions, found in UiPath Action Center as actions. After the job has been suspended, an action is generated in Action Center. The job is resumed only after the action is completed. Form actions need to be completed by the assigned user. User assignment can be handled directly in Action Center, or through the Assign Tasks activity. |

External Actions

| Activities | Use Case |
|---|---|
| | Introduce user intervention conditions, found in UiPath Action Center as actions. After the job has been suspended, an action is generated in Action Center. The job is resumed only after the task is completed. External actions can be completed by any user with Edit permissions on Actions and access to the associated folder. |

Document Validation Actions

| Activities | Use Case |
|---|---|
| | Introduce user intervention conditions, found in UiPath Action Center as actions. After the job has been suspended, an action is generated in Action Center. The job is resumed only after the task is completed. Document Validation actions need to be completed by the assigned user. User assignment can be handled directly in Orchestrator, or through the Assign Tasks activity. |

For the Robot to upload, download, and delete data from a storage bucket, it needs the appropriate permissions. You can grant them by updating the Robot role with the following:

| Activity | Use Case |
|---|---|
| | Introduce a time interval as a delay, during which the workflow is suspended. After the delay has passed, the job is resumed. |

Job fragments are not restricted to being executed by the same Robot. They can be executed by any Robot that is available when the job is resumed and ready for execution. This also depends on the execution target configured when defining the job.

Example

I defined my job to be executed by specific Robots, say X, Y, and Z. When I start the job, only Z is available, so the job is executed by Z until it is suspended awaiting user validation. After the validation, when the job is resumed, only X is available, so the job is executed by X.
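The example above can be traced with a small helper that picks a robot for each fragment from the execution target. This is a hypothetical sketch: it assumes the first robot that is both in the target and currently available is chosen; how Orchestrator breaks ties between several available robots is not described here. W is a hypothetical robot outside the target.

```python
from typing import Optional


def pick_robot(execution_target: list[str], available: set[str]) -> Optional[str]:
    """Pick a robot for the next job fragment: any robot that is both in the
    execution target and available right now, or None if there is none."""
    for robot in execution_target:
        if robot in available:
            return robot
    return None


target = ["X", "Y", "Z"]
fragments = [
    pick_robot(target, available={"Z"}),       # job started: only Z is free
    pick_robot(target, available={"X", "W"}),  # job resumed: X is free, W is outside the target
]
print(fragments)  # ['Z', 'X']
```

Both fragments still belong to one job, which is why monitoring counts it once.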

- From a monitoring point of view, such a job is counted as one, regardless of being fragmented or executed by different Robots.

- Suspended jobs cannot be assigned to Robots, only resumed ones can.

To see the triggers required to resume a suspended job, open the Triggers tab of the Job Details window.

For unattended faulted jobs, if your process had the Enable Recording option switched on, you can download the corresponding execution media to check the last moments of the execution before failure.

The Download Recording option is only displayed on the Jobs window if you have View permissions on Execution Media.

To upload document data:

To delete document data after downloading:
